VariTex: Variational Neural Face Textures

Abstract

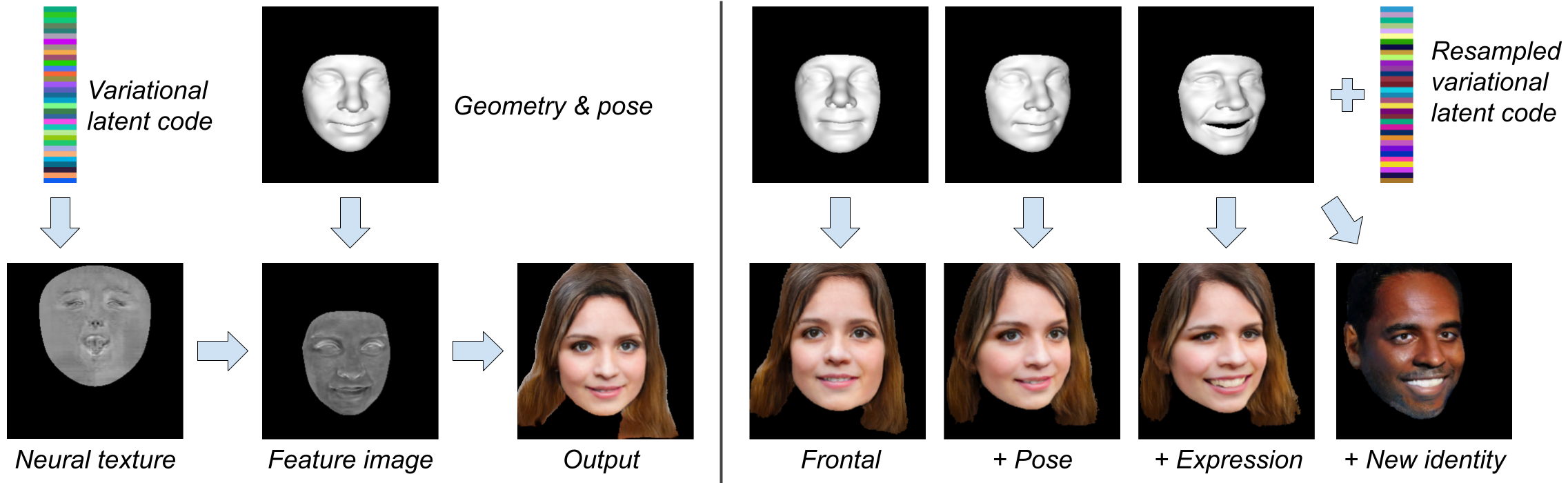

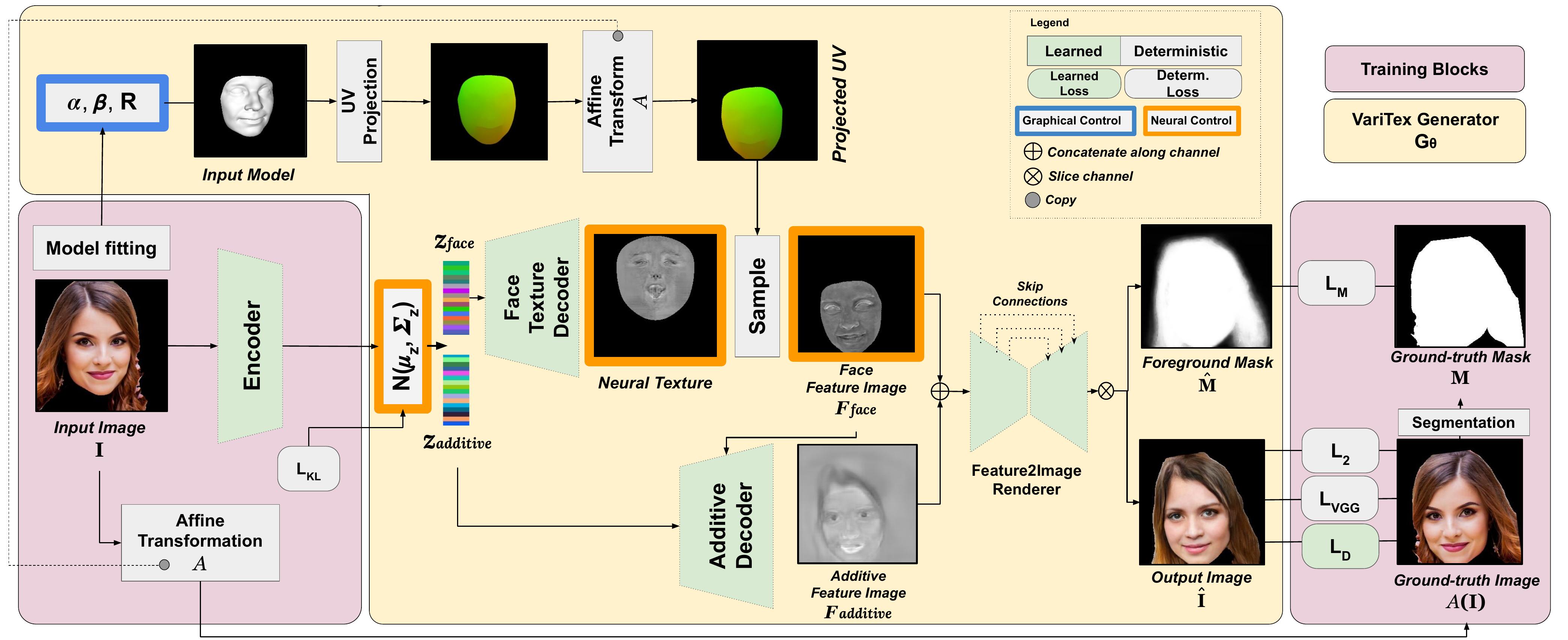

Deep generative models can synthesize photorealistic images of human faces with novel identities. However, a key challenge to the wide applicability of such techniques is to provide independent control over semantically meaningful parameters: appearance, head pose, face shape, and facial expressions. In this paper, we propose VariTex, to the best of our knowledge the first method that learns a variational latent feature space of neural face textures, which allows sampling of novel identities. We combine this generative model with a parametric face model and gain explicit control over head pose and facial expressions. To generate complete images of human heads, we propose an additive decoder that adds plausible details such as hair. A novel training scheme enforces a pose-independent latent space and, in consequence, allows learning a one-to-many mapping between latent codes and pose-conditioned exterior regions. The resulting method can generate geometrically consistent images of novel identities under fine-grained control over head pose, face shape, and facial expressions. This facilitates a broad range of downstream tasks, such as sampling novel identities, changing the head pose, and expression transfer.

Video

VariTex Controls

Expressions

Pose

Identity

Method

The objective of our pipeline is to learn a Generator that can synthesize face images with arbitrary novel identities whose pose and expressions can be controlled using face model parameters. During training, we use unlabeled monocular RGB images to learn a smooth latent space of natural face appearance using a variational encoder. A latent code sampled from this space is then decoded to a novel face image. At test time, we use samples drawn from a normal distribution to generate novel face images. Our variationally generated neural textures can also be stylistically interpolated to generate intermediate identities.
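The sampling and interpolation steps described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the latent size of 256, the helper names `sample_latent` and `interpolate`, the placeholder encoder outputs, and the choice of plain linear interpolation are all assumptions made for illustration. The encoder and decoder networks are omitted entirely.

```python
import numpy as np


def sample_latent(mu, log_var, rng):
    """Reparameterized sample z = mu + sigma * eps, with eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps


def interpolate(z_a, z_b, num_steps):
    """Linearly interpolate between two latent codes, endpoints included."""
    weights = np.linspace(0.0, 1.0, num_steps)[:, None]
    return (1.0 - weights) * z_a + weights * z_b


rng = np.random.default_rng(0)
latent_dim = 256  # assumed size, not taken from the paper

# Training time: a variational encoder would predict mu and log_var from an
# input image. Zeros are used here as stand-ins for those predictions.
mu = np.zeros(latent_dim)
log_var = np.zeros(latent_dim)
z_train = sample_latent(mu, log_var, rng)

# Test time: draw latent codes directly from a standard normal distribution.
z_a = rng.standard_normal(latent_dim)
z_b = rng.standard_normal(latent_dim)

# Intermediate codes between two sampled identities (linear blend assumed).
path = interpolate(z_a, z_b, num_steps=5)
```

In a full pipeline, each row of `path` would be decoded into a neural texture and rendered with the chosen pose and expression parameters, which is the part this sketch leaves out.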

Citation

@inproceedings{buehler2021varitex,
  title={VariTex: Variational Neural Face Textures},
  author={Marcel C. Buehler and Abhimitra Meka and Gengyan Li and Thabo Beeler and Otmar Hilliges},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  year={2021}
}

Acknowledgements

We thank Xucong Zhang, Emre Aksan, Thomas Langerak, Xu Chen, Mohamad Shahbazi, Velko Vechev, Yue Li, and Arvind Somasundaram for their contributions; Ayush Tewari for the StyleRig visuals; and the anonymous reviewers. This project has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme, grant agreement No 717054.